MPI Topology Communicators

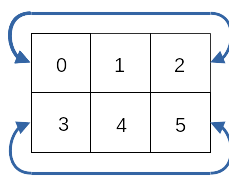

We have already discussed setting up two-dimensional grids of processors. We set up our own mathematicsl to compute the topology, the layout of nearest neighbors as a funciton of row and column. But we could save ourselves the trouble, and possible errors, by using a built-in feature of MPI called Topology Communicators.

We have learned how to create new communicators. MPI provides a specialized set of routines to generate a communicator with the row and column coordinates computed for us. As usual, it also can provide the rank relative to the new communicator.

Communication at the ends of the Cartesian block can be periodic or nonperiodic. So far we’ve only considered nonperiodic boundaries, where for example the “left” of rank - is `MPI_PROC_NULL1, as is the “right” of the rank at the end of the row. We could also wrap the domain, so that data from rank 0 goes to rank nrows-1 and vice versa.

We will just summarize the types for the subprograms related to Cartesian communciator sytnax.

C++

int ndims;

dims is a 2-d int array with elements nrows, ncols

periods is a 2-d int array with elements 0 for nonperiodic boundary along the zeroth dimension (rows in our example) and 1 for a periodic boundary along the first dimension (columns in our example). A periodic boundary wraps the identity of the neighbor to the “empty” side of a rank.

reorder: allow (1) or not (0) MPI to reorder ranks if necessary. Often not needed for simple Cartesian grids.

grid_comm: MPI_Comm, the new communicator.

grid_coords: two-dimensional int array whose values are the coordinates of the location of the ranks specified relative to the Cartesian grid of the new communicator.

MPI_Cart_create(MPI_COMM_WORLD, ndims, dims, periods, reorder, &grid_comm);

MPI_Comm_rank(grid_comm, &grid_rank);

MPI_Cart_coords(grid_comm, grid_rank, ndims, grid_coords);

MPI_Cart_shift(grid_comm, direction, displ, &up, &down);

Fortran 2008

Other than periods and reorder, the types are similar to the C++ arguments. If using the optional last ierror argument, it is integer as usual.

periods: two-dimensional logical array with values .true. (periodic) or .false.(not periodic). reorder: logical .true. or .false.

call MPI_Cart_create(MPI_COMM_WORLD, ndims, dims, periods, &

reorder, grid_comm)

call MPI_Comm_rank(grid_comm, grid_rank)

call MPI_Cart_coords(grid_comm, grid_rank, ndims, coords)

call MPI_Cart_shift(grid_comm, direction, displ, up, down)

Python

dimes: two-dimensional list, arguments nrows, ncols as for other languages. periods: two-dimensional Boolean with arguments True (periodic) or False (nonperiodic) similar to Fortran. reorder: Boolean True or False.

grid_comm=comm.Create_cart(dims, periods)

grid_rank=grid_comm.Get_rank()

grid_coords=grid_comm.coords

(left,right)=grid_comm.Shift(direction,displ)

Example

C++

#include <iostream>

#include <iomanip>

#include <mpi.h>

using namespace std;

/*#This example exchanges data among four rectangular domains with halos. Most real codes use squares, but we want to illustrate how to use different dimensions. Divide up the processes. Either we require a perfect square, or we must specify how to distribute by row/column. In a realistic program,

the process distribution (either the total, for a perfect square, or

the rows/columns) would be read in and we would need to check that the number

of processes requested is consistent with the decomposition. */

int main (int argc, char *argv[]) {

int N, M;

// Added for MPI

int nrl, ncl;

int rank, grid_rank, nprocs;

int up, down, right, left;

int tag=0;

//Initialize MPI

MPI_Init(&argc, &argv);

MPI_Comm_size(MPI_COMM_WORLD,&nprocs);

MPI_Comm_rank(MPI_COMM_WORLD,&rank);

N = 8;

M = 12;

int rows=2;

int cols=3;

if (rows*cols != nprocs) {

cout<<"Number of rows times columns does not equal nprocs\n";

return 1;

}

if (N%rows==0 && M%cols==0) {

nrl = N/rows;

ncl = M/cols;

}

else {

cout<<"Number of ranks should divide the number of rows evenly.\n";

return 2;

}

double **w=new double*[nrl+2];

double *wptr=new double[(nrl+2)*(ncl+2)];

for (int i=0;i<nrl+2;++i,wptr+=ncl+2) {

w[i] = wptr;

}

double counter=1.;

for ( int i = 0; i <= nrl+1; i++ ) {

for (int j = 0; j <= ncl+2; j++ ) {

w[i][j] = (rank+1)*counter;

counter++;

}

}

//Set up the topology assuming processes number left to right by row

int ndims=2;

int dims[2]={rows,cols};

int periods[2]={1,0};

int reorder=0;

MPI_Comm grid_comm;

int grid_coords[2];

MPI_Cart_create(MPI_COMM_WORLD, ndims, dims, periods, reorder, &grid_comm);

MPI_Comm_rank(grid_comm, &grid_rank);

MPI_Cart_coords(grid_comm, grid_rank, ndims, grid_coords);

int direction=0;

int displ=1;

MPI_Cart_shift(grid_comm, direction, displ, &up, &down);

direction=1;

MPI_Cart_shift(grid_comm, direction, displ, &left, &right);

cout<<"topo "<<grid_rank<<" "<<up<<" "<<down<<" "<<left<<" "<<right<<endl;

//Boundary conditions

double topBC=0.;

double bottomBC=200.;

double rightBC=100.;

double leftBC=100.;

if (grid_coords[0]==0) {

for (int i=0;i<=ncl+1;++i){

w[0][i]=topBC;

}

}

if (grid_coords[0]==rows-1) {

for (int i=0;i<=ncl+1;++i){

w[nrl+1][i]=bottomBC;

}

}

if (grid_coords[1]==0) {

for (int i=0;i<=nrl+1;++i){

w[i][0]=leftBC;

}

}

if (grid_coords[1]==cols-1) {

for (int i=0;i<=nrl+1;++i){

w[i][ncl+1]=rightBC;

}

}

//Set up the MPI type for columns

MPI_Datatype col;

MPI_Type_vector(nrl,1,ncl+2,MPI_DOUBLE,&col);

MPI_Type_commit(&col);

MPI_Status status;

MPI_Sendrecv(&w[1][0],ncl+2, MPI_DOUBLE,up,tag,&w[nrl+1][0],

ncl+2, MPI_DOUBLE,down,tag,grid_comm,&status);

MPI_Sendrecv(&w[nrl][0],ncl+2,MPI_DOUBLE,down,tag,&w[0][0],

ncl+2,MPI_DOUBLE,up,tag,grid_comm,&status);

MPI_Sendrecv(&w[1][ncl],1,col,right,tag,&w[1][0],1,col,left, tag, grid_comm, &status);

MPI_Sendrecv(&w[1][1],1,col,left,tag,&w[1][ncl+1],1,col,right, tag, grid_comm, &status);

//Verification. Usually done with plots in real codes.

//Simplified from earlier examples, we'll just spot-check

//Same original values

//

double *u=new double[(nrl+2)*(ncl+2)];

int uwsize=(nrl+2)*(ncl+2);

memset(u,0.,uwsize);

int position;

MPI_Barrier(grid_comm);

if ( grid_rank == 0 ) {

MPI_Recv(u,uwsize,MPI_DOUBLE,1,1,grid_comm,&status);

cout<<"Ranks 0 and 1 Check columns"<<endl;

position=0;

for (int i=0;i<nrl+2;i++) {

for (int j=0;j<ncl+2;j++) {

cout<<w[i][j]<<" ";

}

cout<<" | ";

for (int j=0;j<ncl+2;j++) {

cout<<u[position++]<<" ";

}

cout<<endl;

}

cout<<endl;

}

if ( grid_rank == 1 ) {

position=0;

for (int i=0; i<=nrl+1; i++)

for (int j=0;j<=ncl+1; j++)

u[position++]=w[i][j];

MPI_Send(u,uwsize,MPI_DOUBLE,0,1,grid_comm);

}

MPI_Barrier(grid_comm);

//Check columns on other side

//

memset(u,0.,uwsize);

if ( grid_rank == 1 ) {

MPI_Recv(u,uwsize,MPI_DOUBLE,2,2,grid_comm,&status);

cout<<"Ranks 1 and 2 Check columns"<<endl;

position=0;

for (int i=0;i<nrl+2;i++) {

for (int j=0;j<ncl+2;j++) {

cout<<w[i][j]<<" ";

}

cout<<" | ";

for (int j=0;j<ncl+2;j++) {

cout<<u[position++]<<" ";

}

cout<<endl;

}

cout<<endl;

}

if ( grid_rank == 2 ) {

position=0;

for (int i=0; i<=nrl+1; i++)

for (int j=0;j<=ncl+1; j++)

u[position++]=w[i][j];

MPI_Send(u,uwsize,MPI_DOUBLE,1,2,grid_comm);

}

MPI_Barrier(grid_comm);

//Check periodic row exchanges

//

memset(u,0.,uwsize);

if ( grid_rank == 0 ) {

MPI_Recv(u,uwsize,MPI_DOUBLE,3,3,grid_comm,&status);

cout<<"Ranks 0 and 3 Check rows including periodic exchange"<<endl;

for (int i=0;i<nrl+2;i++) {

for (int j=0;j<ncl+2;j++) {

cout<<w[i][j]<<" ";

}

cout<<endl;

}

cout<<"-=---------------------------------------------------"<<endl;

position=0;

for (int i=0;i<nrl+2;i++) {

for (int j=0;j<ncl+2;j++) {

cout<<u[position++]<<" ";

}

cout<<endl;

}

cout<<endl;

}

if ( grid_rank == 3 ) {

position=0;

for (int i=0; i<=nrl+1; i++)

for (int j=0;j<=ncl+1; j++)

u[position++]=w[i][j];

MPI_Send(u,uwsize,MPI_DOUBLE,0,3,grid_comm);

}

MPI_Barrier(grid_comm);

//end checking section

//Type_free not really necessary but good practice

MPI_Type_free(&col);

MPI_Comm_free(&grid_comm);

MPI_Finalize();

return 0;

}

Fortran

program sendrows

use mpi_f08

double precision, allocatable, dimension(:,:) :: w

double precision, allocatable, dimension(:,:) :: u

integer :: N

integer :: i,j

integer :: nrows, ncols, nrl, ncl

integer :: rank, nprocs, tag=0

integer, dimension(2) :: dims, coords

logical, dimension(2) :: periods

logical :: reorder

integer :: ndims, grid_rank

integer :: direction, displ

type(MPI_Comm) :: grid_comm

type(MPI_Status) :: mpi_stat

type(MPI_Datatype) :: row

integer :: left, right, up, down

integer :: uwsize

!Initialize MPI, get the local number of columns

call MPI_INIT()

call MPI_COMM_SIZE(MPI_COMM_WORLD,nprocs)

call MPI_COMM_RANK(MPI_COMM_WORLD,rank)

N=8

M=12

nrows=2

ncols=3

if (nrows*ncols /= nprocs) then

call MPI_Finalize()

stop "Number of rows times columns does not equal nprocs"

endif

if (mod(N,nrows)==0 .and. mod(M,ncols)==0) then

nrl = N/nrows

ncl = M/ncols

else

call MPI_Finalize()

stop "Number of ranks should divide the number of rows evenly."

endif

!**create cartesian topology for processes

ndims=2

dims(1) = nrows ! number of rows

dims(2) = ncols ! number of columns

periods(1) = .true. ! cyclic in this direction

periods(2) = .false. ! not cyclic in this direction

reorder = .false. ! allow MPI to reorder if it sees fit

call MPI_Cart_create(MPI_COMM_WORLD, ndims, dims, periods, &

reorder, grid_comm)

call MPI_Comm_rank(grid_comm, grid_rank)

call MPI_Cart_coords(grid_comm, grid_rank, ndims, coords)

direction = 0 ! shift along the 1st index (0 or 1)

displ = 1 ! shift by 1

call MPI_Cart_shift(grid_comm, direction, displ, up, down)

direction = 1 ! shift along the 2nd index (0 or 1)

displ = 1 ! shift by 1

call MPI_Cart_shift(grid_comm, direction, displ, left, right)

!write(*,*) 'topo', grid_rank, up, down, left, right

! Set up values

allocate(w(0:nrl+1, 0:ncl+1))

w=reshape([(i,i=1,(nrl+2)*(ncl+2))],(/nrl+2,ncl+2/))

w=(grid_rank+1)*w

! Boundary conditions

topBC=0.d0

bottomBC=200.d0

edgeBC=100.d0

if (coords(1)==0) then

w(0,:)=topBC

else if (coords(1)==nrows-1) then

w(nrl+1,:)=bottomBC

endif

if (coords(2)==0) then

w(:,0)=edgeBC

else if (coords(2)==ncols-1) then

w(:,ncl+1)=edgeBC

endif

call MPI_Type_vector(ncl+2,1,nrl+2,MPI_DOUBLE_PRECISION,row)

call MPI_TYPE_COMMIT(row)

! Exchange halo values

! Send left and right

call MPI_SENDRECV(w(1:nrl,1) ,nrl,MPI_DOUBLE_PRECISION,left,tag, &

w(1:nrl,ncl+1),nrl,MPI_DOUBLE_PRECISION,right,tag, &

grid_comm,mpi_stat)

call MPI_SENDRECV(w(1:nrl,ncl) ,nrl,MPI_DOUBLE_PRECISION,right,tag, &

w(1:nrl,0) ,nrl,MPI_DOUBLE_PRECISION,left,tag, &

grid_comm,mpi_stat)

! Send up and down

call MPI_SENDRECV(w(1,0) ,1, row, up,tag, w(nrl+1,0),1, row,down,tag, &

grid_comm,mpi_stat)

call MPI_SENDRECV(w(nrl,0) ,1,row, down,tag, w(0,0) ,1,row, up,tag, &

grid_comm,mpi_stat)

! Check results

allocate(u(0:nrl+1,0:ncl+1))

uwsize=(nrl+2)*(ncl+2)

!Check columns

u=0.d0

if (rank==0) then

call MPI_Recv(u,uwsize,MPI_DOUBLE_PRECISION,1,1,grid_comm,mpi_stat)

write(*,*) "Ranks 0 and 1 check columns "

do i=0,nrl+1

write(*,'(*(f8.1))',advance='no') w(i,:)

write(*,'(a)',advance='no') "|"

write(*,'(*(f8.1))') u(i,:)

enddo

write(*,*)

endif

if (rank==1) then

call MPI_Send(w,uwsize,MPI_DOUBLE_PRECISION,0,1,grid_comm)

endif

call MPI_Barrier(grid_comm)

u=0.d0

if (rank==1) then

call MPI_Recv(u,uwsize,MPI_DOUBLE_PRECISION,2,2,grid_comm,mpi_stat)

write(*,*) "Ranks 1 and 2 check columns "

do i=0,nrl+1

write(*,'(*(f8.1))',advance='no') w(i,:)

write(*,'(a)',advance='no') "|"

write(*,'(*(f8.1))') u(i,:)

enddo

write(*,*)

endif

if (rank==2) then

call MPI_Send(w,uwsize,MPI_DOUBLE_PRECISION,1,2,grid_comm)

endif

call MPI_Barrier(grid_comm)

! Check rows including periodic exchange

u=0.d0

if (rank==0) then

call MPI_Recv(u,uwsize,MPI_DOUBLE_PRECISION,3,3,grid_comm,mpi_stat)

write(*,*) "Ranks 0 and 3 check rows including periodic exchange "

do i=0,nrl+1

write(*,'(*(f8.1))') w(i,:)

enddo

write(*,*) "--------------------------------------------------"

do i=0,nrl+1

write(*,'(*(f8.1))') u(i,:)

enddo

endif

if (rank==3) then

call MPI_Send(w,uwsize,MPI_DOUBLE_PRECISION,0,3,grid_comm)

endif

call MPI_Barrier(grid_comm)

call MPI_Type_free(row)

call MPI_Comm_free(grid_comm)

call MPI_Finalize()

end program

Python

import sys

import numpy as np

from mpi4py import MPI

comm = MPI.COMM_WORLD

rank = comm.Get_rank()

nprocs = comm.Get_size()

#This example exchanges data among four rectangular domains with halos.

#Most real codes use squares, but we want to illustrate how to use different

#dimensions.

#Divide up the processes. Either we require a perfect square, or we

#must specify how to distribute by row/column. In a realistic program,

#the process distribution (either the total, for a perfect square, or

#the rows/columns) would be read in and we would need to check that the number

#of processes requested is consistent with the decomposition.

N = 8

M = 12

nrows=2

ncols=3

if nrows*ncols != nprocs:

print("Number of rows times columns does not equal nprocs")

sys.exit()

if N%nrows==0 and M%ncols==0:

nrl = N//nrows

ncl = M//ncols

else:

print("Number of ranks should divide the number of rows evenly.")

sys.exit()

#Set up the topology

ndims=2

dims=[nrows, ncols]

periods=[True, False]

grid_comm=comm.Create_cart(dims, periods)

grid_rank=grid_comm.Get_rank()

grid_coords=grid_comm.coords

direction=0

displ=1

(up,down)=grid_comm.Shift(direction, displ)

direction=1

(left,right)=grid_comm.Shift(direction,displ)

#print("Topo {:d} {:} {:d} {:d} {:d}".format(grid_rank,left,right,up,down))

w = np.zeros((nrl+2, ncl+2), dtype=np.double)

#Arbitrary values.

w[:,:] = np.reshape(np.arange(1,(nrl+2)*(ncl+2)+1),(nrl+2,ncl+2))

w=w*(rank+1)

#Bouncary conditions

topBC=0.

bottomBC=200.

edgeBC=100.

if grid_coords[0]==0:

w[0,:]=topBC

if grid_coords[0]==nrows-1:

w[nrl+1,:]=bottomBC

if grid_coords[1]==0:

w[:,0]=edgeBC

if grid_coords[1]==ncols-1:

w[:,ncl+1]=edgeBC

# set up MPI type for left column

column_zero=MPI.DOUBLE.Create_subarray([nrl+2,ncl+2],[nrl,1],[1,0])

column_zero.Commit()

column_one=MPI.DOUBLE.Create_subarray([nrl+2,ncl+2],[nrl,1],[1,1])

column_one.Commit()

column_end=MPI.DOUBLE.Create_subarray([nrl+2,ncl+2],[nrl,1],[1,ncl])

column_end.Commit()

column_endbc=MPI.DOUBLE.Create_subarray([nrl+2,ncl+2],[nrl,1],[1,ncl+1])

column_endbc.Commit()

tag=0

# sending up and receiving down

grid_comm.Sendrecv([w[1,0:ncl+2],MPI.DOUBLE], up, tag, [w[nrl+1,0:ncl+2],MPI.DOUBLE], down, tag)

# sending down and receiving up

grid_comm.Sendrecv([w[nrl,0:ncl+2],MPI.DOUBLE], down, tag, [w[0,0:ncl+2],MPI.DOUBLE], up, tag)

# sending left and right

grid_comm.Sendrecv([w,1,column_one], left, tag, [w,1,column_endbc], right, tag)

grid_comm.Sendrecv([w,1,column_end], right, tag, [w,1,column_zero], left, tag)

#Results

status=MPI.Status()

#Check row exchange

u=np.zeros_like(w)

uwsize=(nrl+2)*(ncl+2)

grid_comm.Barrier()

if rank==0:

grid_comm.Recv([u,MPI.DOUBLE],source=1,tag=1,status=status)

print("Ranks 0 and 1 check columns")

for i in range(nrl+2):

print(w[i,:],end='')

print(" | ",end='')

print(u[i,:])

print()

if rank==1:

grid_comm.Send([w,MPI.DOUBLE],dest=0,tag=1)

grid_comm.Barrier()

u[:,:]=0.0

if rank==1:

grid_comm.Recv([u,MPI.DOUBLE],source=2,tag=2,status=status)

print("Ranks 1 and 2 check columns")

for i in range(nrl+2):

print(w[i,:],end='')

print(" | ",end='')

print(u[i,:])

print()

if rank==2:

grid_comm.Send([w,MPI.DOUBLE],dest=1,tag=2)

#Checkrows including periodic

grid_comm.Barrier()

u[:,:]=0.0

if rank==0:

grid_comm.Recv([u,MPI.DOUBLE],source=3,tag=3,status=status)

print("Ranks 0 and 3 check rows including periodic exchange")

for i in range(nrl+2):

print(w[i,:])

print('-----------------------------------------')

for i in range(nrl+2):

print(u[i,:])

if rank==3:

grid_comm.Send([w,MPI.DOUBLE],dest=0,tag=3)