Deep Learning

Deep learning is a branch of machine learning, itself a subfield of artificial intelligence, in which programs use neural networks with multiple layers to transform a set of input values into output values.

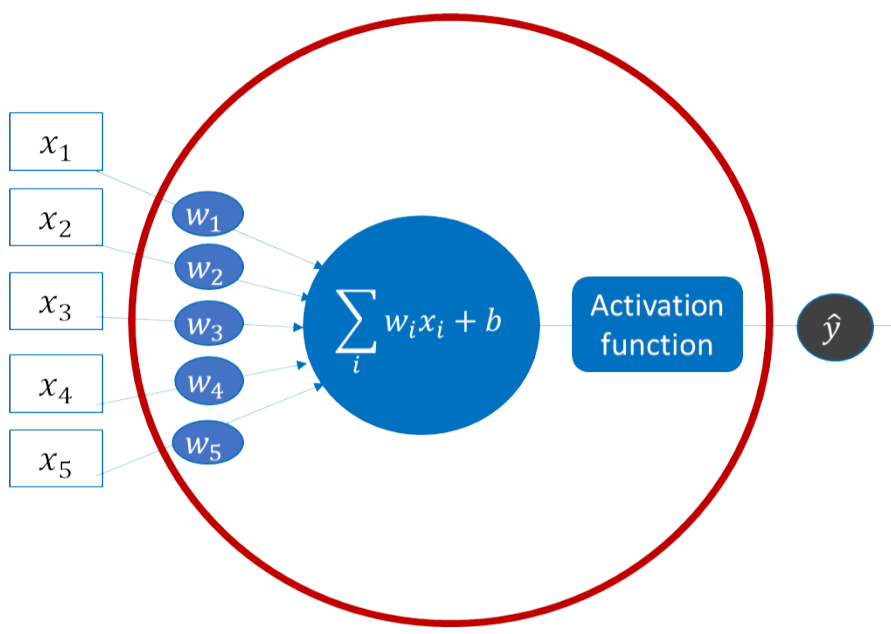

Nodes

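Each “node” in the neural network performs a set of computations: it multiplies each input $x_i$ by a weight $w_i$, sums the results, adds a bias $b$, and passes the total through an activation function.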

The weights, $w_i$, and the bias, $b$, are not known in advance. Each node has its own set of unknown values. During training, the “best” set of weights and biases is determined: the values that produce an output close to $y$ for the collection of inputs $x_i$.
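A single node's computation can be sketched in plain Python. This assumes the common formulation $y = f\left(\sum_i w_i x_i + b\right)$ with a sigmoid activation $f$; the function names and the example numbers are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def node_output(inputs, weights, bias, activation=sigmoid):
    """Compute activation(sum_i w_i * x_i + b) for a single node."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical example values, chosen only for illustration.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.2]
b = 0.1
print(node_output(x, w, b))
```

During training, only `w` and `b` change; the inputs come from the data.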

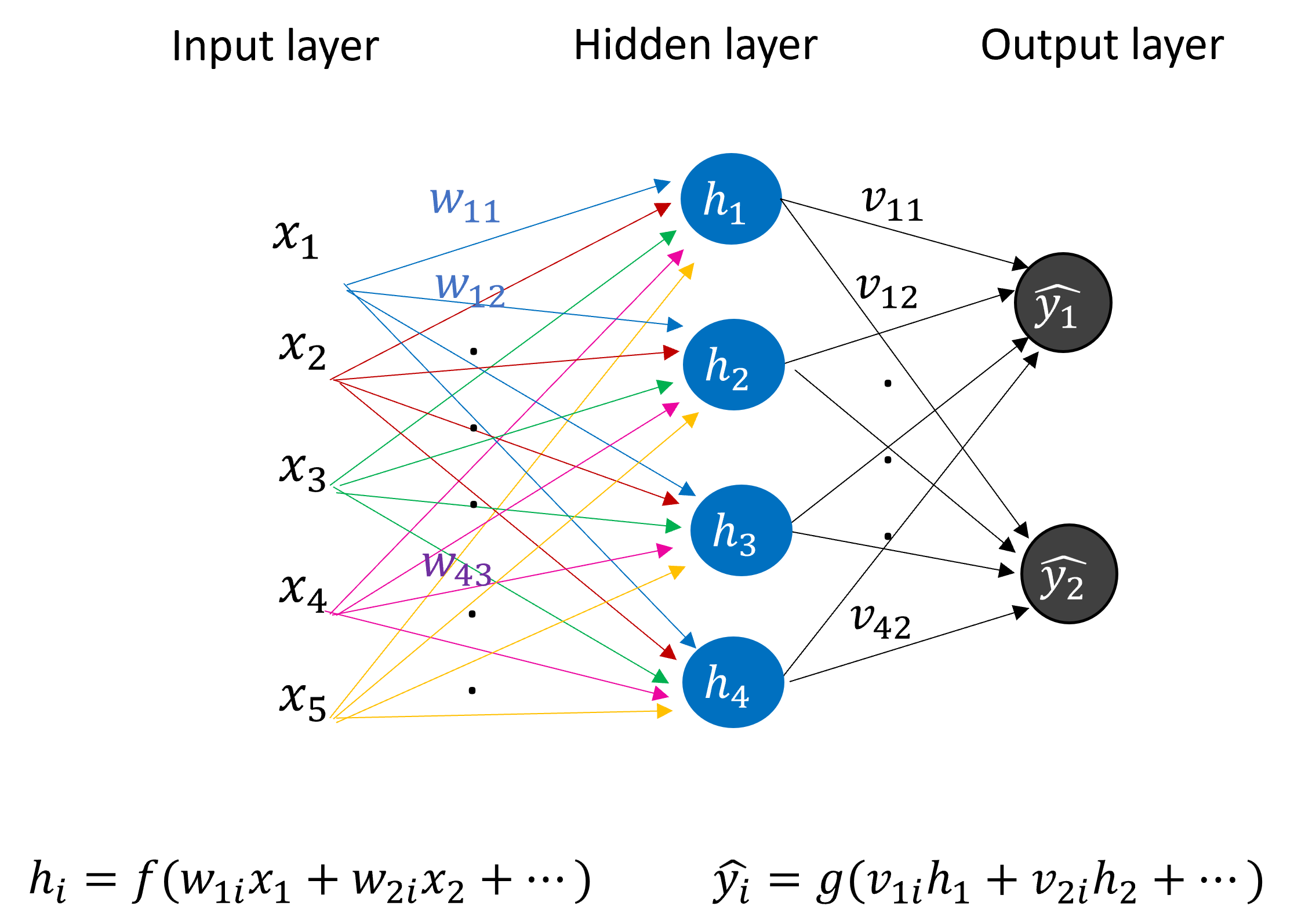

Network of Neurons

- Several nodes can receive the same inputs but apply different weights, so each node produces a different output.

- This is called a feedforward network: values flow in one direction only, from the input layer to the output layer.

- A basic neural network has a single hidden layer between the input and output layers.

- A network with two or more hidden layers is called a “deep neural network”.
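The sketch below chains fully connected layers into a feedforward network with two hidden layers, which makes it “deep” by the definition above. The layer sizes, the random initialization, ReLU in the hidden layers, and an identity output are illustrative assumptions, and the helper names are hypothetical; a framework such as PyTorch or TensorFlow would normally provide these layers.

```python
import random

def relu(z):
    """ReLU activation used in the hidden layers."""
    return max(0.0, z)

def identity(z):
    """No activation on the output layer (an illustrative choice)."""
    return z

def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: node j computes activation(sum_i weights[j][i] * inputs[i] + biases[j])."""
    return [activation(sum(w * x for w, x in zip(node_w, inputs)) + b)
            for node_w, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    """Random initial weights in [-1, 1] and zero biases (one simple choice among many)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def feedforward(x, layers):
    """Values flow strictly from input to output, one layer at a time."""
    for weights, biases, activation in layers:
        x = dense_layer(x, weights, biases, activation)
    return x

# Hypothetical sizes: 3 inputs -> 4 hidden -> 4 hidden -> 2 outputs (two hidden layers).
sizes = [3, 4, 4, 2]
rng = random.Random(0)
layers = []
for i, (n_in, n_out) in enumerate(zip(sizes[:-1], sizes[1:])):
    weights, biases = init_layer(n_in, n_out, rng)
    is_output = i == len(sizes) - 2
    layers.append((weights, biases, identity if is_output else relu))

print(feedforward([0.5, -1.0, 2.0], layers))
```

Each entry in `layers` holds its own weights and biases, which is what training would adjust.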

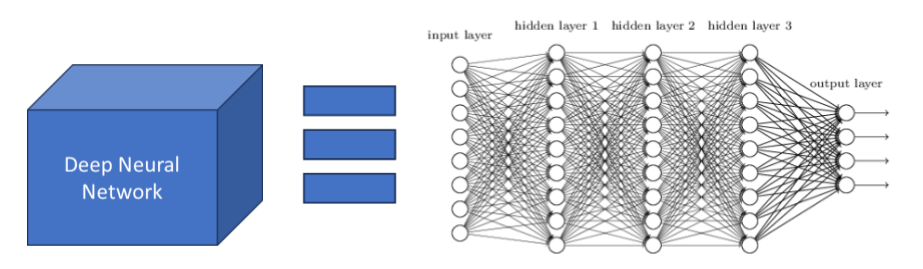

Deep Learning Neural Network

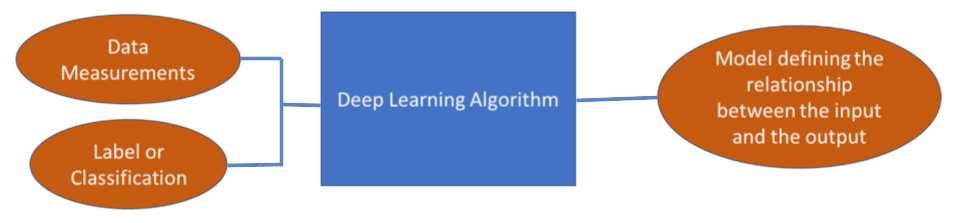

DL Algorithm

During the training or “fitting” process, the deep learning algorithm is fed a set of measurements (features) together with the expected outcome for each example (e.g., a label or classification).

The algorithm then determines the weights and biases that best map the features to the expected outcomes.

How Does the Machine Learn?

- Start with a random guess for the weights and biases.

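- The network's output (“predicted”) values are compared with the expected results (categories/labels).

- A separate function, called the “loss” or “cost” function, measures the overall error of the model.

- That error is propagated backwards through the network to determine how much each weight and bias contributed to it, and the weights and biases are tweaked accordingly.

- This step is called backpropagation (backward propagation of errors).

- The forward pass, loss calculation, and backpropagation are repeated over the training data many times until the loss stops improving.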

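These steps can be sketched for the simplest possible model: one linear node fitted to points on the line $y = 2x + 1$ with a mean squared error loss. For a single node the backward pass reduces to the chain rule, so the gradients are written out by hand here; frameworks such as PyTorch compute them automatically for deep networks. The function name, learning rate, and epoch count are hypothetical choices for this toy problem.

```python
import random

def train_linear_node(data, lr=0.05, epochs=500, seed=0):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)  # 1. random initial guess
    n = len(data)
    loss = float("inf")
    for _ in range(epochs):
        # 2. Forward pass: predictions for every training example.
        preds = [w * x + b for x, _ in data]
        # 3. Loss: mean squared error between predictions and targets.
        loss = sum((p - y) ** 2 for p, (_, y) in zip(preds, data)) / n
        # 4. Backward pass: gradients of the loss with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, (x, y) in zip(preds, data)) / n
        grad_b = sum(2 * (p - y) for p, (_, y) in zip(preds, data)) / n
        # 5. Update: step each parameter against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss  # loss is the value measured before the final update

data = [(x, 2 * x + 1) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
w, b, loss = train_linear_node(data)
print(w, b, loss)
```

The learned `w` and `b` should end up very close to 2 and 1, the values used to generate the data.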

Overview of the Learning Process

Deep Neural Network

DNN Examples

- Feed-Forward NN

  - Consist of an input layer, an output layer, and many fully connected hidden layers; can be used to build speech-recognition, image-recognition, and machine-translation software.

- Recurrent NN

  - Process sequences by carrying a hidden state from one step to the next; commonly used for image captioning, time-series analysis, natural-language processing, machine translation, etc.

- Convolutional NN

  - Consist of multiple layers, including convolutional layers that slide small learned filters across the input; mainly used for image processing and object detection.

- And many more, such as radial basis function networks (RBFNs), generative adversarial networks (GANs), modular neural networks, etc.
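To make the contrast with a feed-forward layer concrete, here is a minimal sketch of the core operation of the other two families: a 1-D convolution (the sliding-filter operation in a CNN) and a single-unit recurrent cell whose hidden state carries information along a sequence. Both are simplified scalar versions written for illustration; the names and the tanh recurrence are assumptions, not a particular library's implementation. As in most deep learning libraries, the “convolution” here is technically a cross-correlation (the kernel is not flipped).

```python
import math

def conv1d(signal, kernel):
    """'Valid' 1-D convolution: slide the same small kernel over the input
    and take a dot product at each position (weights are shared across positions)."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn_forward(sequence, w_x, w_h, b):
    """Scalar recurrent cell: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    The hidden state h carries information from earlier steps to later ones."""
    h = 0.0
    states = []
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states

print(conv1d([1, 2, 3, 4, 5], [1, 0, -1]))
print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```

In the recurrent example, the later outputs are nonzero even though the later inputs are zero, because the hidden state remembers the first input.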

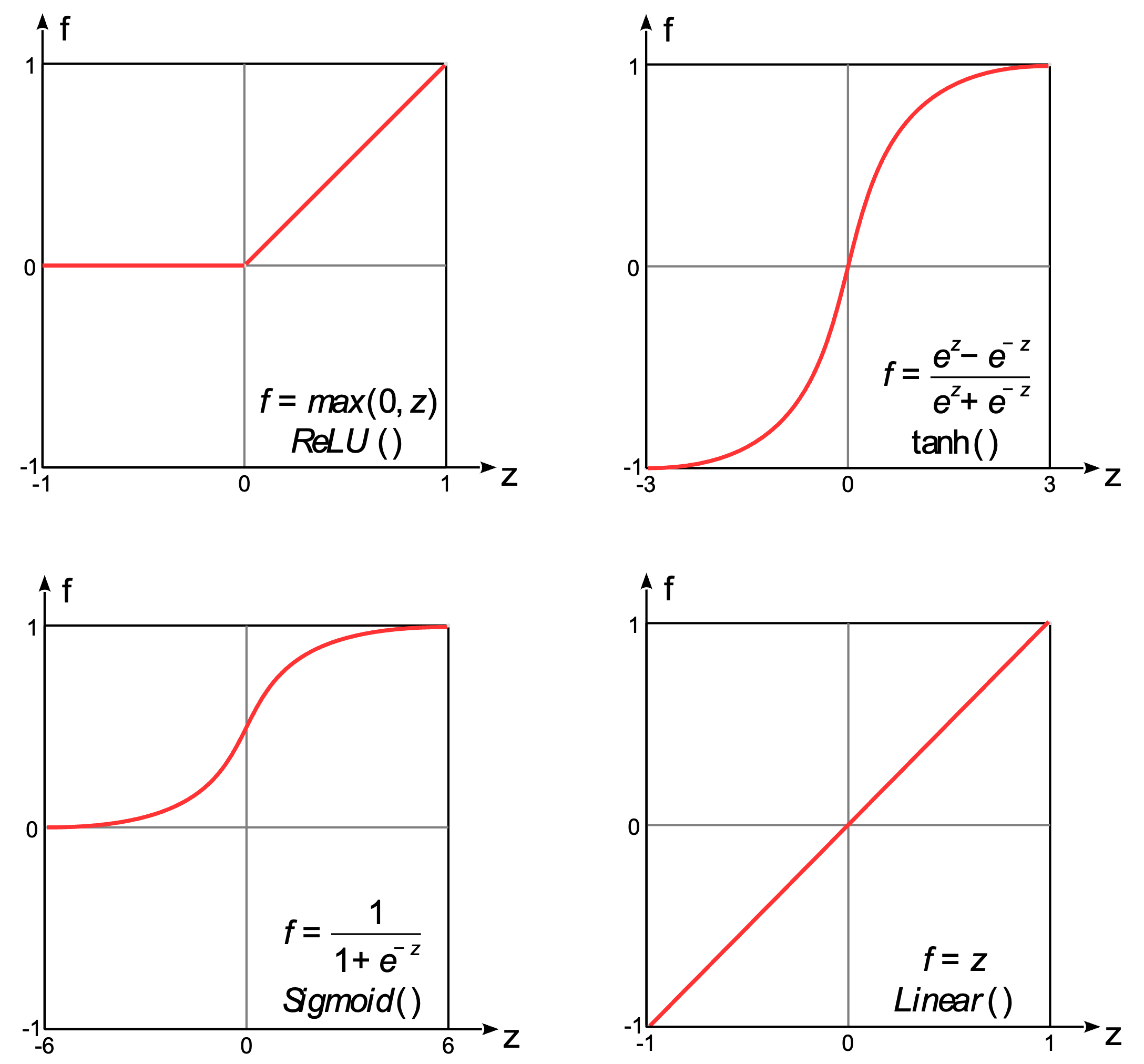

Activation Function

- The activation function introduces nonlinearity into the model.

- A different activation function can be chosen for each layer.

- Examples include ReLU (common in hidden layers), Sigmoid (output layer for binary classification), and Softmax (output layer for multiclass classification).

- A complete list is available at
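The three example activation functions can be written in a few lines each. This is an illustrative plain-Python sketch, not the PyTorch or TensorFlow implementations; the softmax subtracts the largest score before exponentiating, a common trick that avoids overflow without changing the result.

```python
import math

def relu(z):
    """ReLU: pass positive values through, clamp negatives to 0."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid: map any real number into (0, 1), e.g. a probability for binary classification.
    (math.exp overflows for very negative z; acceptable for this sketch.)"""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Softmax: turn a list of scores into probabilities that sum to 1 (multiclass classification).
    Subtracting the max first keeps exp() from overflowing without changing the result."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0), sigmoid(0.0), softmax([1.0, 2.0, 3.0]))
```

Without a nonlinearity such as these, stacking layers would collapse into a single linear transformation.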

Loss Function

- The loss function tells us how good our model is at relating the input to the output.

- It is the function that is minimized during training to improve the performance of the model.

- The cost value measures the discrepancy between the network's predicted output and the actual output for a set of labeled training data.

- The choice of loss function is based on the task.

- Examples include:

  - Classification: BCELoss (Binary Cross Entropy) and Cross Entropy Loss.

  - Regression: Mean Squared Error (MSE).

- A complete list is available at https://pytorch.org/docs/stable/nn.html#loss-functions and https://www.tensorflow.org/api_docs/python/tf/keras/losses
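The example losses can be sketched directly from their definitions. These hypothetical helpers work on predicted probabilities. Note that PyTorch's `CrossEntropyLoss` expects raw, unnormalized scores (logits) and applies the softmax internally, whereas `BCELoss` expects probabilities, so check the documentation linked above before mapping this sketch onto a library.

```python
import math

def mse(targets, preds):
    """Mean squared error (regression)."""
    return sum((y - p) ** 2 for y, p in zip(targets, preds)) / len(targets)

def binary_cross_entropy(targets, probs, eps=1e-12):
    """Binary cross entropy for 0/1 targets and predicted probabilities.
    Probabilities are clipped away from 0 and 1 so log() stays finite."""
    total = 0.0
    for y, p in zip(targets, probs):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(targets)

def cross_entropy(probs, target_index):
    """Multiclass cross entropy for one example: -log of the probability of the true class."""
    return -math.log(probs[target_index])

print(mse([1.0, 2.0], [1.0, 4.0]))               # regression
print(binary_cross_entropy([1, 0], [0.9, 0.2]))  # binary classification
print(cross_entropy([0.1, 0.7, 0.2], 1))         # 3-class classification
```

All three shrink toward 0 as the predictions approach the targets, which is what the optimizer exploits.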

Optimizer Function

- The optimizer function adjusts the weights and biases during training, using the gradients of the loss computed by backpropagation, so that the best weights and biases are reached efficiently.

- Examples include SGD, Adam, and RMSprop.

- A complete list is available at https://pytorch.org/docs/stable/optim.html?highlight=optimizer#torch.optim.Optimizer and https://www.tensorflow.org/api_docs/python/tf/keras/optimizers
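The difference between plain gradient descent (the full-batch form of SGD) and Adam can be seen on a toy one-parameter loss $L(\theta) = (\theta - 3)^2$. This is a sketch under stated assumptions: the loss, starting point, learning rates, and step counts are hypothetical, while the Adam coefficients `beta1=0.9` and `beta2=0.999` match the commonly used defaults. Libraries apply the same kind of update to every weight and bias, using gradients from backpropagation.

```python
import math

def grad(theta):
    """Gradient of the toy loss L(theta) = (theta - 3)^2, minimized at theta = 3."""
    return 2.0 * (theta - 3.0)

def sgd(theta, lr=0.1, steps=200):
    """Plain gradient descent: step against the gradient, scaled by lr."""
    for _ in range(steps):
        theta -= lr * grad(theta)
    return theta

def adam(theta, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Adam: steps scaled by running averages of the gradient (m)
    and of the squared gradient (v)."""
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

print(sgd(0.0), adam(0.0))
```

Both should end near 3; Adam's per-parameter scaling matters more in real networks, where gradients for different weights can differ by orders of magnitude.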