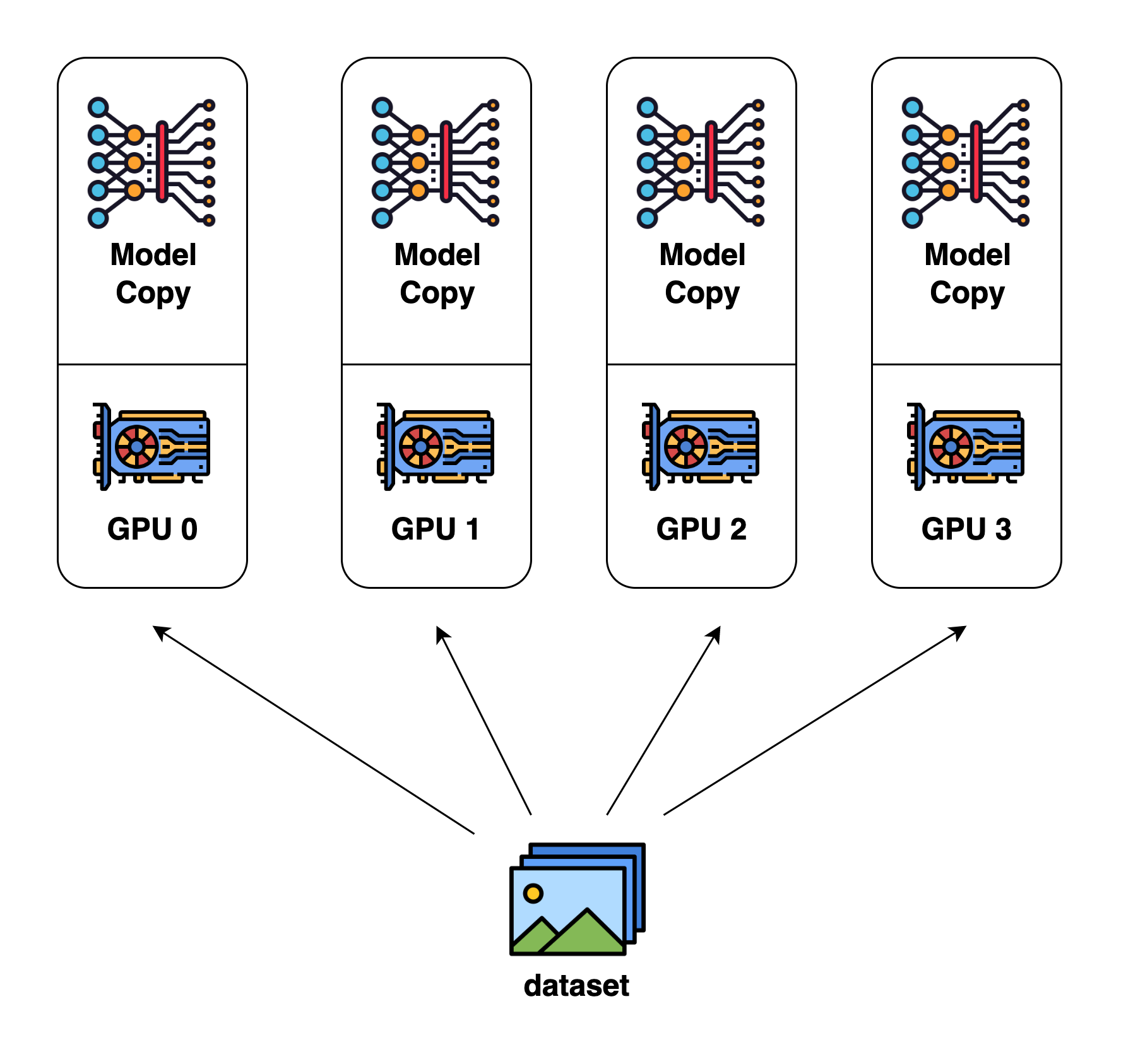

Data Parallelism

In data parallelism, each GPU contains a full copy of the model.

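The input data is split across GPUs, and each GPU runs inference on its own shard in parallel. Because the replicas process different samples independently, this raises throughput: a large batch finishes faster than it would on a single GPU.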
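The replicate, split, run, gather pattern can be sketched in plain Python. This is a minimal CPU-only simulation under stated assumptions: the toy dot-product "model" and the helper names (`make_model`, `split_batch`, `data_parallel_inference`) are hypothetical, and each thread worker merely stands in for a GPU. Python threads do not give real compute speedup here; the sketch only shows how the work is organized.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

def make_model(weights: Sequence[float]) -> Callable[[Sequence[float]], float]:
    """Hypothetical toy model: a dot product with fixed weights."""
    w = list(weights)
    def model(x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(w, x))
    return model

def split_batch(batch: List, num_devices: int) -> List[List]:
    """Split a batch into num_devices contiguous shards of near-equal size."""
    k, r = divmod(len(batch), num_devices)
    shards, start = [], 0
    for i in range(num_devices):
        end = start + k + (1 if i < r else 0)  # first r shards get one extra item
        shards.append(batch[start:end])
        start = end
    return shards

def data_parallel_inference(weights, batch, num_devices: int) -> List[float]:
    # Each simulated "GPU" holds its own full replica of the model.
    replicas = [make_model(weights) for _ in range(num_devices)]
    shards = split_batch(batch, num_devices)

    def run(replica, shard):
        return [replica(x) for x in shard]

    # Run every replica on its shard concurrently (threads stand in for GPUs).
    with ThreadPoolExecutor(max_workers=num_devices) as pool:
        per_device = list(pool.map(run, replicas, shards))

    # Gather: concatenate per-device outputs, preserving the original order.
    return [y for out in per_device for y in out]

batch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
print(data_parallel_inference([0.5, 1.0], batch, num_devices=2))
```

Because every replica has identical weights, the gathered result matches running the whole batch on one model; only the division of work changes. In real deployments a framework would place each replica on a separate device.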

Source: https://colossalai.org/docs/concepts/paradigms_of_parallelism/#data-parallel