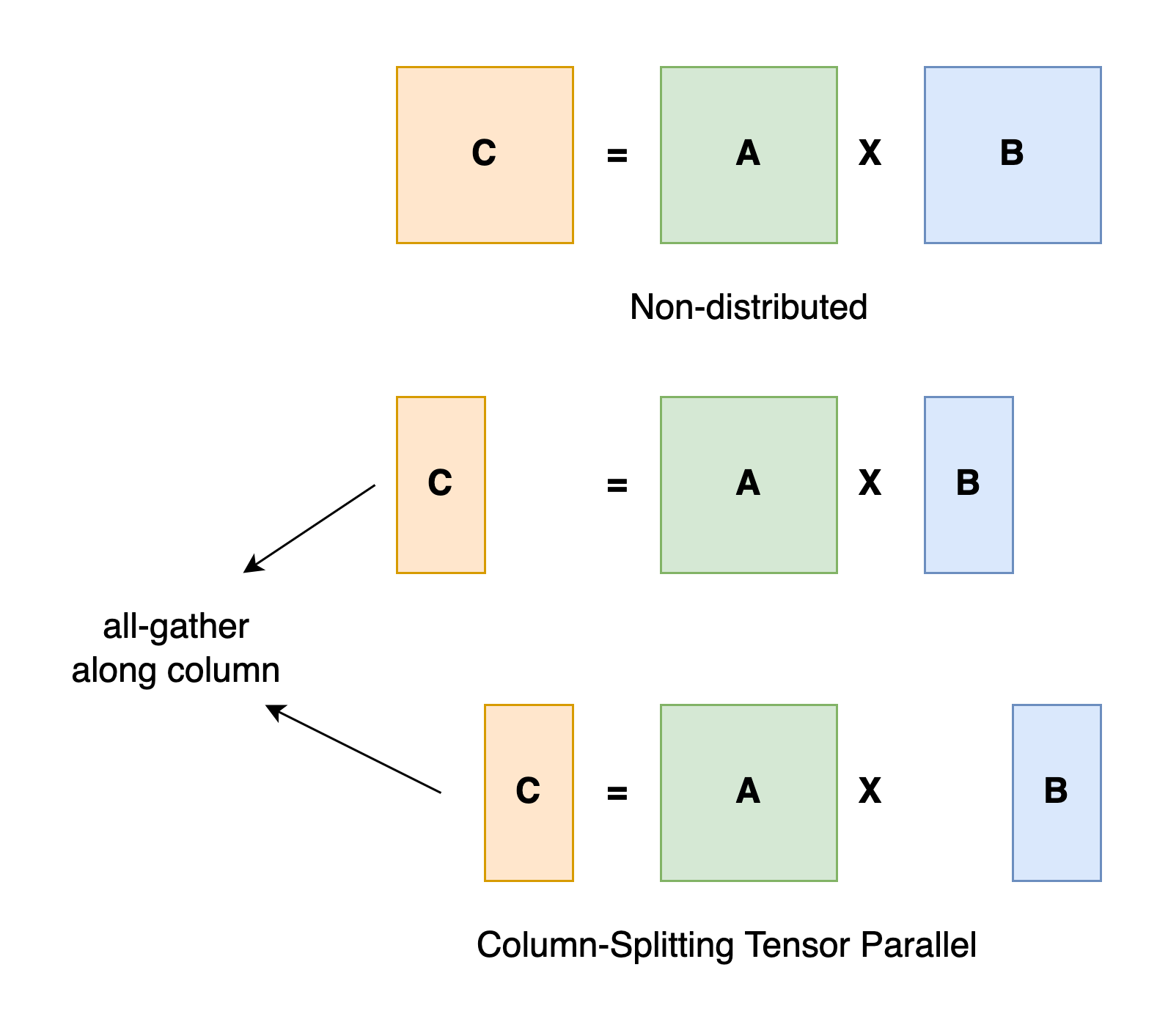

Tensor Parallelism (Intra-Layer)

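Tensor parallelism (intra-layer) splits the tensor computations within a layer across GPUs. Each GPU holds one shard of the tensor (for example, a slice of a weight matrix) and computes on its shard in parallel with the others. This works well for transformer layers, whose large matrix multiplications split cleanly. However, the partial results have to be combined across GPUs, so it requires frequent communication and a fast interconnect (e.g. NVLink, which UVA HPC has in the BasePOD).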
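To make the idea concrete, here is a minimal single-process sketch in plain Python. It is only an illustration: the "devices" are simulated with lists, the helper names (`matmul`, `split_columns`, `column_parallel_matmul`) are hypothetical, and the final concatenation stands in for the communication step that a real multi-GPU setup would perform over the interconnect.

```python
def matmul(a, b):
    """Plain matrix multiply on nested lists (rows of a times columns of b)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def split_columns(weight, num_devices):
    """Split a weight matrix column-wise into one equal shard per simulated device."""
    n_cols = len(weight[0])
    if n_cols % num_devices != 0:
        raise ValueError("number of columns must be divisible by num_devices")
    step = n_cols // num_devices
    return [[row[d * step:(d + 1) * step] for row in weight] for d in range(num_devices)]


def column_parallel_matmul(x, weight, num_devices):
    """Compute x @ weight by giving each simulated device a column shard of weight."""
    shards = split_columns(weight, num_devices)
    # Each "device" multiplies the full input by its own shard (parallel on real GPUs).
    partials = [matmul(x, shard) for shard in shards]
    # Stand-in for the communication step: stitch the partial outputs back together.
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


x = [[1, 2], [3, 4]]
weight = [[1, 0, 2, 1], [0, 1, 1, 3]]
# The sharded result matches the unsharded multiplication.
print(column_parallel_matmul(x, weight, num_devices=2) == matmul(x, weight))
```

Each shard's multiplication is independent, which is where the parallelism comes from; the stitching step is the part that needs the fast interconnect on real hardware.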

Source: https://colossalai.org/docs/concepts/paradigms_of_parallelism/#tensor-parallel