Random Forest

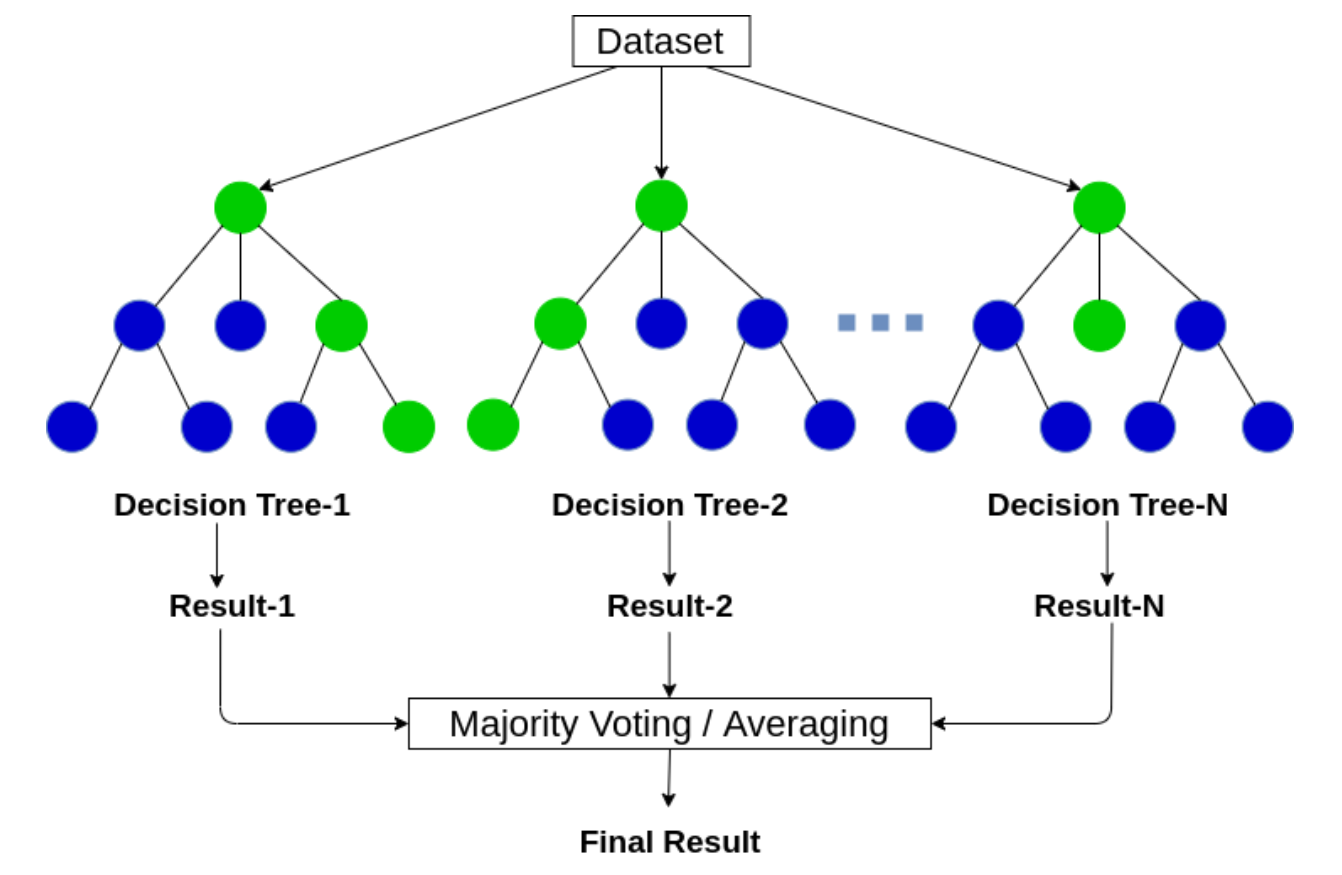

Random forest is a supervised learning algorithm, most often used for classification (it can also be applied to regression). It uses an ensemble technique.

- Ensemble techniques combine a group of “weaker” learners to build a stronger one.

- Random Forest combines the results of multiple decision trees to create a more robust result.

Random Forest: How does it work?

Different decision tree algorithms can produce different results. The random forest aggregates the decisions from the individual trees (for classification, typically by majority vote) to determine an overall solution.
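The aggregation step can be sketched in a few lines. This is a minimal illustration that assumes majority voting for classification; the function name `majority_vote` and the list of votes are hypothetical and stand in for the predictions of real trees.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Return the label predicted by the largest number of trees for one sample."""
    counts = Counter(tree_predictions)
    # most_common(1) returns a list with the single (label, count) pair
    # that has the highest count.
    label, _ = counts.most_common(1)[0]
    return label


# Hypothetical predictions from five trees for a single data point.
votes = ["blue", "orange", "blue", "blue", "orange"]
forest_prediction = majority_vote(votes)
print(forest_prediction)
```

Here three of the five trees vote "blue", so the forest reports "blue". In this sketch a tie is broken in favor of the label that appears first in the list; using an odd number of trees for a two-class problem avoids ties altogether.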

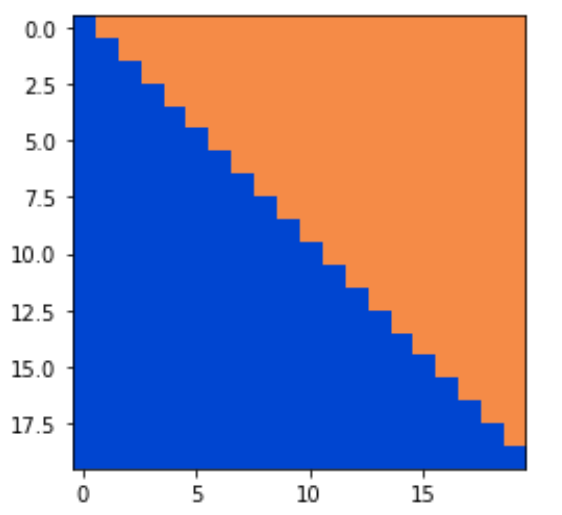

Suppose that the data fall into one of two categories (blue or orange) depending on two values, x and y, as shown in this figure:

A decision tree could choose a relationship between x, y, and the categories that matches one of the following figures:

By combining the outcomes of many trees, the random forest can approximate the desired mapping more closely than any single tree.

Random forests can use different techniques for selecting which features are considered when computing each decision value. As a result, different trees can end up choosing different features.
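The text does not name a specific selection technique. One common approach, assumed here purely for illustration, is to train each tree on a bootstrap sample of the data and to let each split consider only a random subset of the features. The helper names `bootstrap_sample` and `random_feature_subset`, the feature names, and the data rows are all hypothetical.

```python
import random


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement, so some rows repeat and some are left out."""
    return [rng.choice(rows) for _ in rows]


def random_feature_subset(feature_names, k, rng):
    """Pick k distinct features that a single split is allowed to consider."""
    return rng.sample(feature_names, k)


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical data set: (x, y, label) rows.
rows = [(0.1, 0.9, "blue"), (0.4, 0.2, "orange"), (0.8, 0.7, "blue"), (0.6, 0.1, "orange")]
features = ["x", "y", "z", "w"]

sample_for_tree = bootstrap_sample(rows, rng)
features_for_split = random_feature_subset(features, 2, rng)
print(sample_for_tree)
print(features_for_split)
```

Because every tree sees a slightly different sample and a different set of candidate features, the trees tend to disagree in different places, which is what makes combining them worthwhile.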

Random Forest: Feature Importance

- We would like to know the “importance” of the features (e.g., which features are the most important for making decisions).

- Different algorithms use various metrics to determine the importance of the features.

The exact values of these measurements are not as important as the resulting order of the features.
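The point about order versus value can be illustrated with a small sketch. The two score dictionaries below are made-up numbers standing in for two different importance metrics; they are not results from any real model, and `rank_features` is a hypothetical helper.

```python
def rank_features(importances):
    """Return feature names sorted from most to least important."""
    ordered = sorted(importances.items(), key=lambda item: item[1], reverse=True)
    return [name for name, _ in ordered]


# Hypothetical scores from two different importance metrics.
# The scales differ, but the ordering of the features is the same.
metric_a = {"x": 0.62, "y": 0.30, "z": 0.08}
metric_b = {"x": 14.1, "y": 5.7, "z": 0.9}

ranking_a = rank_features(metric_a)
ranking_b = rank_features(metric_b)
print(ranking_a)
print(ranking_b)
```

Both metrics rank the features as x, then y, then z, even though their raw numbers are not comparable. In practice the scores would come from a trained model; for example, scikit-learn's `RandomForestClassifier` exposes them through its `feature_importances_` attribute after fitting.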