Optimizing LLMs with Fine-Tuning

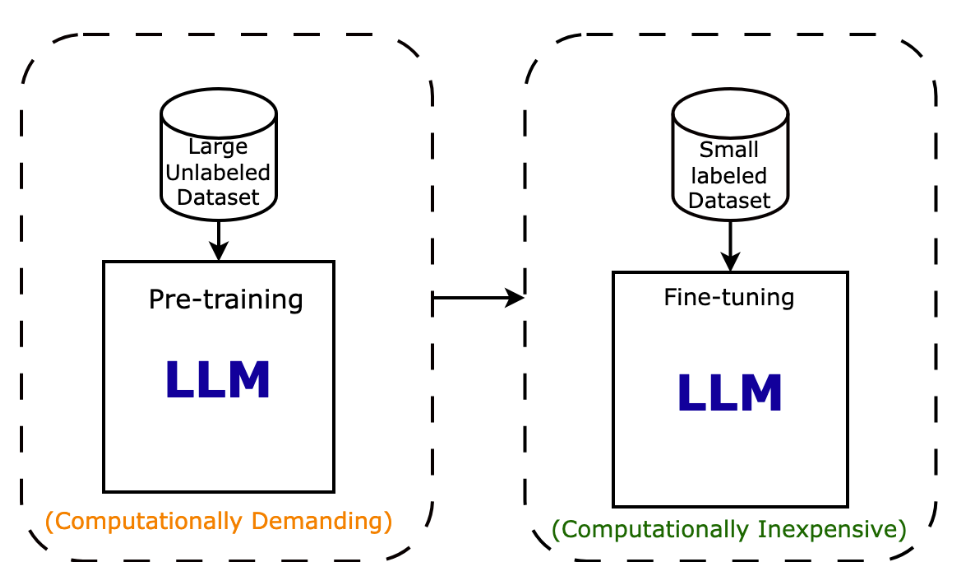

Fine-tuning builds on a pre-trained large language model (LLM) by training it further on a smaller, labeled dataset so that it specializes in, and performs better on, a specific task. Pre-training requires a very large dataset and is computationally demanding; fine-tuning is far less resource-intensive and lets a model adapt efficiently to domain-specific needs.
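The split between expensive pre-training and cheap task-specific training can be illustrated with a toy sketch. It is not a real LLM: `PRETRAINED_WEIGHTS`, `extract_features`, `fine_tune_head`, and the six training sentences are all hypothetical stand-ins invented for illustration. It shows only the simplest variant, where the pre-trained part stays frozen and a small classification head is trained on a handful of labeled examples (often called head-only fine-tuning or linear probing). Full fine-tuning would also update the pre-trained weights.

```python
import math

# Hypothetical stand-in for weights learned during large-scale pre-training.
# They are never updated below: the "backbone" stays frozen.
PRETRAINED_WEIGHTS = {
    "great": 1.0, "good": 0.8, "love": 0.9,
    "bad": -0.8, "awful": -1.0, "boring": -0.7,
}

def extract_features(text):
    """Frozen backbone: map text to [bias term, summed word score]."""
    score = sum(PRETRAINED_WEIGHTS.get(w, 0.0) for w in text.lower().split())
    return [1.0, score]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(examples, epochs=200, lr=0.5):
    """Train only a small logistic-regression head with plain SGD."""
    head = [0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            x = extract_features(text)
            pred = sigmoid(sum(w * xi for w, xi in zip(head, x)))
            error = pred - label  # gradient of the log loss w.r.t. the logit
            head = [w - lr * error * xi for w, xi in zip(head, x)]
    return head

def predict(head, text):
    """Return the predicted probability that the text is positive."""
    x = extract_features(text)
    return sigmoid(sum(w * xi for w, xi in zip(head, x)))

# Small labeled dataset for the downstream task (1 = positive, 0 = negative).
train_data = [
    ("a great film", 1),
    ("i love it", 1),
    ("good acting", 1),
    ("awful plot", 0),
    ("bad and boring", 0),
    ("boring film", 0),
]

head = fine_tune_head(train_data)
print(predict(head, "great acting") > 0.5)      # positive review
print(predict(head, "awful and boring") > 0.5)  # negative review
```

Only the two numbers in `head` are learned from the labeled data, which is why so few examples suffice in this sketch; everything the model "knows" about individual words comes from the frozen weights.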

Example:

distilbert/distilbert-base-uncased was pre-trained on BookCorpus and English Wikipedia, ~25 GB of data

distilbert/distilbert-base-uncased-finetuned-sst-2-english was fine-tuned on the Stanford Sentiment Treebank (SST-2), ~5 MB of data